One of the key tasks during initialization of a Volt Active Data cluster is determining cluster topology. The topology includes placement of the partition masters and replicas on physical Volt Active Data nodes. Once determined, the topology does not change unless a node fails or the cluster is scaled elastically.

For some years, Volt Active Data used a simple algorithm for determining topology. It worked by round-robin assigning all partition replicas to different nodes until all sites on each node were assigned a partition. Partition masters were chosen in round-robin order so they spread out in the cluster. The algorithm was very simple to debug and served most early use cases well.

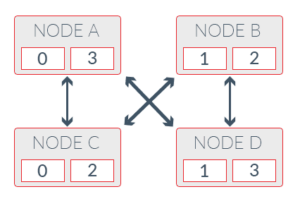

We updated the algorithm in Volt Active Data v3 to accommodate the change to transaction management. The updated algorithm made sure all partitions had k+1 replicas in the cluster. It also had an optimization built in: by letting the sites on a node replicate partition masters on different nodes, it maximized the number of connections between cluster nodes. We had found that OS-level socket processing became a bottleneck in some benchmarks because each Volt Active Data node kept only a single connection to each of the other nodes in the cluster. Splitting the traffic across multiple sockets yielded better performance. The following diagrams illustrate this optimization.

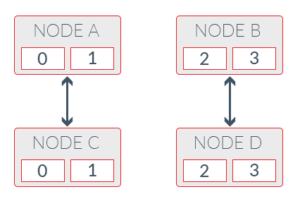

Image 1.1: The initial algorithm assigns partition replicas in round-robin order. The example in the diagram has four nodes, with two sites on each node and a k-factor of 1, which gives four partitions. In this configuration, node C is a perfect replica of node A, and node D is a perfect replica of node B. This assignment does not work well with network-intensive applications because data is replicated over a single link within each node pair.
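The configuration in the caption can be reproduced with a few lines of Python. This is a minimal sketch of a round-robin assignment, not Volt Active Data's actual implementation; the function names `round_robin_topology` and `replication_links` are hypothetical and exist only for illustration.

```python
def round_robin_topology(nodes, sites_per_node, k_factor):
    """Assign partition replicas to sites in round-robin order.

    Hypothetical helper: returns a dict mapping each node name to the
    list of partition ids hosted on its sites.
    """
    if k_factor >= len(nodes):
        raise ValueError("k-factor must be smaller than the number of nodes")
    total_sites = len(nodes) * sites_per_node
    replicas_per_partition = k_factor + 1
    if total_sites % replicas_per_partition != 0:
        raise ValueError("total sites must be divisible by k+1")
    partition_count = total_sites // replicas_per_partition

    topology = {node: [] for node in nodes}
    for site in range(total_sites):
        # Fill the sites node by node while cycling through partition ids.
        node = nodes[site // sites_per_node]
        topology[node].append(site % partition_count)
    return topology


def replication_links(topology):
    """Return the set of node pairs that share at least one partition."""
    links = set()
    names = sorted(topology)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if set(topology[a]) & set(topology[b]):
                links.add((a, b))
    return links


topology = round_robin_topology(["A", "B", "C", "D"], sites_per_node=2, k_factor=1)
print(topology)                     # {'A': [0, 1], 'B': [2, 3], 'C': [0, 1], 'D': [2, 3]}
print(sorted(replication_links(topology)))  # [('A', 'C'), ('B', 'D')]
```

Only two of the six possible node pairs carry replication traffic here, which is the single-link-per-pair situation the caption describes.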


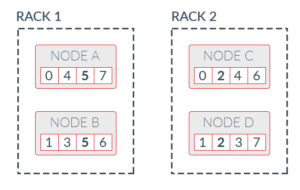

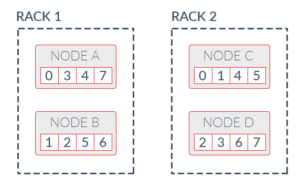

As Volt Active Data clusters grew larger, the need for a more sophisticated topology calculation algorithm became clear. Among requested features, the ability to distribute partition replicas based on the placement of nodes headed the list. (This is known as rack-awareness in other systems.)

Placing the partition replicas with rack-awareness prevents all replicas of a partition from landing on the same rack, which increases the probability that the cluster will remain available when nodes fail. Without this functionality, all nodes are treated equally when choosing the next node to replicate a partition. If there are enough nodes on the same rack to satisfy the specified k-safety level, it is possible to inadvertently assign all replicas of a partition to nodes on the same rack.

Rack-awareness takes the placement of nodes into account when partition replicas are assigned. It uses a greedy algorithm to pick the next node that is as far away from the partition master as possible. If a rack already has a replica of a partition, Volt Active Data will look for another rack for the next replica instead of putting more than one replica on the same rack. In addition to awareness of node placement, the new algorithm inherits the optimization to maximize connections between nodes from the old algorithm.
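The greedy choice described above can be sketched as follows. This is an illustration under simplifying assumptions (a single level of racks, and ties broken by node name), not the product's real code; `pick_replica_nodes` and the `node_racks` mapping are hypothetical names.

```python
def pick_replica_nodes(master, node_racks, k_factor):
    """Greedily choose k_factor replica nodes for one partition.

    master:     name of the node hosting the partition master
    node_racks: dict mapping node name -> rack name (assumed input shape)
    """
    chosen = [master]
    candidates = [n for n in node_racks if n != master]
    for _ in range(k_factor):
        if not candidates:
            raise ValueError("not enough nodes for the requested k-factor")
        used_racks = {node_racks[n] for n in chosen}
        # False sorts before True, so nodes on racks without a replica win.
        # The node name is only a deterministic tie-breaker.
        best = min(candidates, key=lambda n: (node_racks[n] in used_racks, n))
        chosen.append(best)
        candidates.remove(best)
    return chosen[1:]


racks = {"A": "rack1", "B": "rack1", "C": "rack2", "D": "rack3"}
print(pick_replica_nodes("A", racks, k_factor=2))  # ['C', 'D']
print(pick_replica_nodes("A", racks, k_factor=3))  # ['C', 'D', 'B']
```

With a k-factor of 2, node B is skipped because rack1 already holds the master. It is used only once every other rack has a replica.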

To use the new feature, tell each Volt Active Data node its placement by passing the command-line argument “--placement-group” to the voltdb command. Placement is not restricted to racks; it can be anything that physically or logically separates nodes. A placement group can also contain more than one level. For example, if you have nodes in multiple rows of racks in a datacenter, you can use “dc1.row2.rack9.nodeA” to denote the placement group of a node. Each level of placement is separated by a dot. By default, all nodes belong to the same group.
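One way to reason about multi-level placement groups is to measure how far apart two dotted names are. The text does not say how Volt Active Data computes this internally, so the metric below (the number of levels remaining after the shared prefix) is an assumption, and `placement_distance` is a hypothetical helper.

```python
def placement_distance(group_a, group_b):
    """Count the levels that remain after the common prefix of two groups.

    Assumed metric for illustration: 0 means the same group, larger
    values mean the groups diverge closer to the top of the hierarchy.
    """
    a = group_a.split(".")
    b = group_b.split(".")
    shared = 0
    for x, y in zip(a, b):
        if x != y:
            break
        shared += 1
    return max(len(a), len(b)) - shared


master = "dc1.row2.rack9.nodeA"
others = [
    "dc1.row2.rack9.nodeB",  # same rack
    "dc1.row3.rack1.nodeC",  # same datacenter, different row
    "dc2.row1.rack1.nodeD",  # different datacenter
]
for group in others:
    print(group, placement_distance(master, group))

# The candidate farthest from the master under this metric:
print(max(others, key=lambda g: placement_distance(master, g)))  # dc2.row1.rack1.nodeD
```

Under this metric the three candidates score 1, 3, and 4, so a greedy chooser would prefer the node in the other datacenter.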

Once all nodes are started with the corresponding placement group, partition replicas will be assigned to different groups. In the event of a rack failure due to power loss or switch malfunction, the other racks will still contain at least one replica for any partition, keeping the cluster running.
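The guarantee in the previous paragraph can be checked for any given topology. The sketch below assumes a topology expressed as a mapping from node to hosted partition ids and a mapping from node to placement group. Both shapes and the function name `survives_any_single_group_failure` are hypothetical.

```python
def survives_any_single_group_failure(topology, node_groups):
    """Return True if every partition keeps a replica after losing any one group.

    topology:    dict mapping node name -> list of partition ids
    node_groups: dict mapping node name -> placement group name
    """
    partitions = {p for hosted in topology.values() for p in hosted}
    for failed in set(node_groups.values()):
        surviving = {
            p
            for node, hosted in topology.items()
            if node_groups[node] != failed
            for p in hosted
        }
        if surviving != partitions:
            return False
    return True


topology = {"A": [0, 1], "B": [2, 3], "C": [0, 1], "D": [2, 3]}

# Replicas split across racks: losing either rack leaves a full copy.
print(survives_any_single_group_failure(
    topology, {"A": "rack1", "B": "rack1", "C": "rack2", "D": "rack2"}))  # True

# Both replicas of partitions 0 and 1 sit on rack1: losing it loses data.
print(survives_any_single_group_failure(
    topology, {"A": "rack1", "C": "rack1", "B": "rack2", "D": "rack2"}))  # False
```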

Rack-awareness was introduced in Volt Active Data v5.5. Learn more about our current version at our docs site.