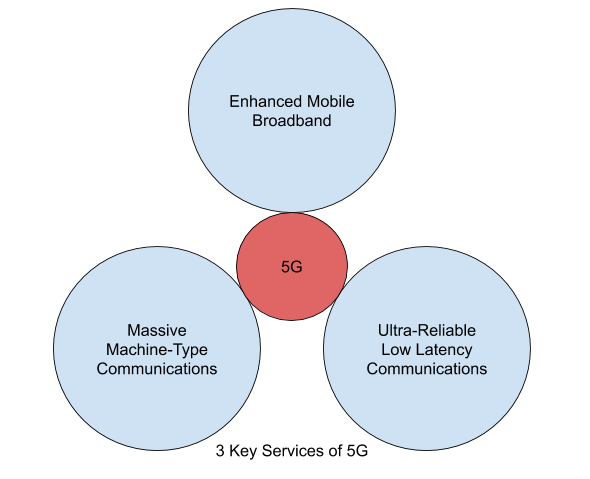

Much of the mainstream media coverage of 5G revolves around the potential benefits for individual consumers, such as AR/VR gaming. But as analysts have observed and projected, communications service providers (CSPs) are acutely aware that premium revenues will come not from individual subscribers but from enterprises embarking on a journey to participate in the Fourth Industrial Revolution. 5G services such as massive machine-type communications (mMTC) and ultra-reliable low-latency communications (uRLLC) point towards connected enterprises being the key beneficiaries of 5G advances.

For enterprises to truly take advantage of these 5G services, it is imperative that they go through a true digital transformation. Digital transformation is not to be confused with simple digitalization of existing processes. To do justice to any digital transformation exercise, an organization must consider rearchitecting its business processes to leverage machine-to-machine communication to automate processes, decisions, and actions. Doing so eliminates expensive or catastrophic human errors and shortens the time to value, moving the point of value extraction closer to the moment the event data is generated. The critical dots to connect to complete this picture are data, business rules, decisions, and actions.

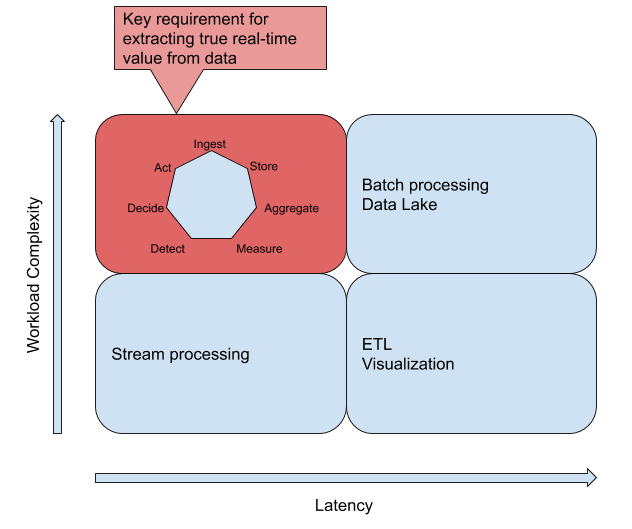

Enterprises looking to take their competitiveness to the next level with the speed and low latency that 5G offers are realizing that there needs to be a fundamental shift in the expectation of what real-time entails. The definition of real-time is based on two key factors:

- Latency: How much time passes between the event that generated the data and the extraction of value from that data point.

- Workload: What needs to be done within that latency to extract relevant value from the data to respond meaningfully to the event.

As the diagram above shows, a true event-driven architecture demands more than event-driven stream processing for simple aggregations and joins. A true real-time workload requires, at a minimum, seven broad steps: ingestion, storage, aggregation, measurement of key performance indicators, detection of any exception indicators (deviations), decision making to handle these complex deviations, and invocation of appropriate actions (monetization or threat prevention) based on the outcomes of the decision-making step. All of this needs to happen within 10 ms or less. If you miss this window, you enter the reconciliation zone in the best case. In the worst case, the data sits there consuming your infrastructure while nobody knows it exists, becoming the nightmare of dark data.
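To make the seven steps and the 10 ms window concrete, here is a minimal Python sketch. It is an illustration only, not how Volt Active Data implements anything: the `Pipeline` class, the event fields (`device`, `value`, `event_time_ms`), the running-average KPI, the threshold, and the `throttle`/`allow` actions are all hypothetical names and choices made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass, field

LATENCY_BUDGET_MS = 10.0  # the 10 ms window described in the text


@dataclass
class Pipeline:
    """Toy single-process pipeline; every name here is hypothetical."""
    threshold: float = 100.0  # assumed KPI limit that marks a deviation
    store: list = field(default_factory=list)
    totals: dict = field(default_factory=lambda: defaultdict(float))
    counts: dict = field(default_factory=lambda: defaultdict(int))

    def process(self, event: dict, now_ms: float) -> dict:
        # 1. Ingestion: accept the raw event and pull out its fields.
        device = event["device"]
        value = float(event["value"])
        # 2. Storage: keep the event so later steps work on durable state.
        self.store.append(event)
        # 3. Aggregation: update running totals per device.
        self.totals[device] += value
        self.counts[device] += 1
        # 4. KPI measurement: here, the running average per device.
        kpi = self.totals[device] / self.counts[device]
        # 5. Deviation detection: compare the KPI to the threshold.
        deviation = kpi > self.threshold
        # 6. Decision: choose a response to the deviation.
        decision = "throttle" if deviation else "allow"
        # 7. Action: produce the command a downstream system would execute.
        action = f"{decision}:{device}"
        # Latency is measured from event time, not from arrival time.
        latency_ms = now_ms - event["event_time_ms"]
        return {
            "kpi": kpi,
            "action": action,
            "latency_ms": latency_ms,
            "in_budget": latency_ms <= LATENCY_BUDGET_MS,
        }
```

The caller passes `now_ms` explicitly so the budget check is deterministic. An event whose result comes back with `in_budget` set to `False` is one that has slipped into the reconciliation zone.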

Now, how does new behavior of systems or humans affect the decisions being made? It is important for this real-time processing platform not just to handle the streams of data but also to feed prepared retraining data to the machine learning layers, which digest it and create new insights. Once created, these new insights need to be incorporated into the decision-making process. This completes two cycles that need to run in parallel:

- One cycle is the event-to-action cycle, which Gartner refers to as a real-time control loop.

- The other cycle is the event-learn-decide cycle, which brings streaming data and big data together to manage the handling of false positive and false negative scenarios.
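The interplay of the two cycles can be sketched roughly as follows. This is a deliberately simplified stand-in: a real deployment would retrain a machine learning model, whereas this example only nudges a threshold based on labelled feedback. The `ControlLoop` class, its `decide` and `learn` methods, and the fixed `step` are hypothetical and exist only for illustration.

```python
class ControlLoop:
    """Toy model of the two cycles; all names are hypothetical."""

    def __init__(self, threshold: float, step: float = 5.0):
        self.threshold = threshold  # current decision rule
        self.step = step            # assumed adjustment size per learning pass

    def decide(self, score: float) -> bool:
        # Event-to-action cycle: flag the event immediately using the
        # rule that is in force right now.
        return score > self.threshold

    def learn(self, labelled: list) -> None:
        # Event-learn-decide cycle: `labelled` holds (score, truly_anomalous)
        # pairs gathered after the fact. Count how the current rule would
        # have misjudged them and adjust the rule accordingly.
        false_pos = sum(1 for s, truth in labelled
                        if s > self.threshold and not truth)
        false_neg = sum(1 for s, truth in labelled
                        if s <= self.threshold and truth)
        if false_pos > false_neg:
            self.threshold += self.step   # too trigger-happy: loosen
        elif false_neg > false_pos:
            self.threshold -= self.step   # missing real events: tighten
```

For example, a loop that starts with a threshold of 100 flags a score of 104. If feedback then shows more false positives than false negatives, `learn` raises the threshold, and the same score passes on the next event.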

Volt Active Data, which started life as an in-memory database re-architected from the ground up, has transformed, through learning from our customers and partners, into a holistic real-time data value management platform that handles both of these cycles together in one fluid environment. If any of this resonates with where you are in your data value extraction journey and you are interested in learning whether and how Volt Active Data can accelerate it, feel free to DM me on Twitter @dremella or on LinkedIn.

Learn more at our upcoming webinar, “The Hidden Inflection Point in 5G: When the Changing Definition of Real-Time Breaks Your Existing Tech Stack”.