Table Of Contents

Need 640K TPS and perfectly accurate running totals? Volt Active Data has the answer.

But it’s not what you’d expect…

Anyone who has worked in the OLTP RDBMS space for any length of time will have noticed that heavy OLTP workloads don’t sit well with heavy summarization/COUNT (*)/running total workloads.

The root cause of this is pretty simple — getting accurate running totals involves creating a read consistent view of the data at the point in time the query was issued. But what if my read consistent view is of thousands of rows which are themselves constantly changing? In that case the work the server has to do goes up dramatically, and you rapidly get to the point where the queries take several seconds to run, and thus return results which are accurate reflections of the world, but as of several seconds ago. This may not be what you want.

The analysts have focused on this problem space, Gartner referring to it as “HTAP” and Forrester using the term “Translytics”. This is a far from trivial problem to solve. Since it’s not economic to count everything, every time, you need to come up with a way of keeping running totals that doesn’t itself turn into a massive bottleneck. While here at Volt Active Data we don’t scream “HTAP” and “Translytics” from the rooftop, we’re actually very good at doing this stuff.

Example: A Fantasy Sports League

Suppose you are registering people for fantasy sports, and your traffic arrives in a massive surge as everyone all tries to sign up at the same time. Your schema will look something like this:

We’re going to be getting messages asking to register customer ‘x’ for contest ‘y’. Contests have anything up to 1 million entries – we have a fantasy cricket customer in India that sees this. Our challenge is that each contest has a maximum number of entrants, and our goal is to register exactly that many without any delays or overallocations. We can expect around 30K registration attempts per second.

The pseudo logic will look something like this:

- Make sure the contest they want to sign up for exists.

- Find out how many entries are currently in the contest in question.

- If less than the limit for that contest, create an entry.

Anyone who’s worked with relational databases for any length of time will see the problem with step #2 – we’re being asked to count the number of entries in a contest every time we add one. While we’ve got a view called ‘contest_entry_count’ that tells us how many there are common sense tells us there’s no way you can query it 30,000 times a second. Unless you’re using Volt Active Data, in which case it works. Why?

In a ‘traditional’ database a “View” is a “SQL statement in disguise” – if you were lucky the SQL parser would take your statement and merge it with the view’s SQL statement and then execute it. If you were unlucky it might execute the view’s SQL, put the results in a temporary table and then call the SQL. Neither of these approaches works well when the view SQL is inherently expensive, like ‘contest_entry_count’ above.

Volt Active Data treats views very differently. Note that in the DDL above ‘contest_entry’ is partitioned on contest_id. This means that all the entries for a given contest will be in the same partition. A Volt Active Data database usually has eight or more partitions, with the exact number being determined by number of servers, high availability requirements, available CPU cores etc. But for our purposes we can assume 8. Volt Active Data handles the task of deciding which data belongs to which partition for you. Now a key thing here is that each partition has a dedicated CPU core ‘minding’ it. Only that core gets to touch anything inside that partition. Because there’s only one core managing the partition, keeping running totals suddenly becomes a lot simpler, as there is nobody else messing with the data at the same time. In practice this means that creating a view on a Volt Active Data table creates an extra CPU overhead of between 1 and 3% every time you change the table the view’s based on, but reading the view involves getting totals that already exist and is thus really fast.

The Volt Active Data server code to do this is shown below, and is available here:

As you can see, the flow of logic maps fairly neatly onto the pseudo-code we described above, with the parameters being passed to the ‘run’ method which then does the required interactions with the database. The key point is that the query of the view (‘getContestEntryCount’) does not slow things down, with the statement taking an average of 0.01ms to run.

To measure performance we did some tests on an a 3 node AWS z1d.2xlarge cluster, with command logging/snapshots enabled and 2 copies of every data item (‘k=1’). z1d is interesting, in that it has a small number of very fast CPU cores. A 3 node cluster of z1d.2xlarge costs US$2.49/hour.

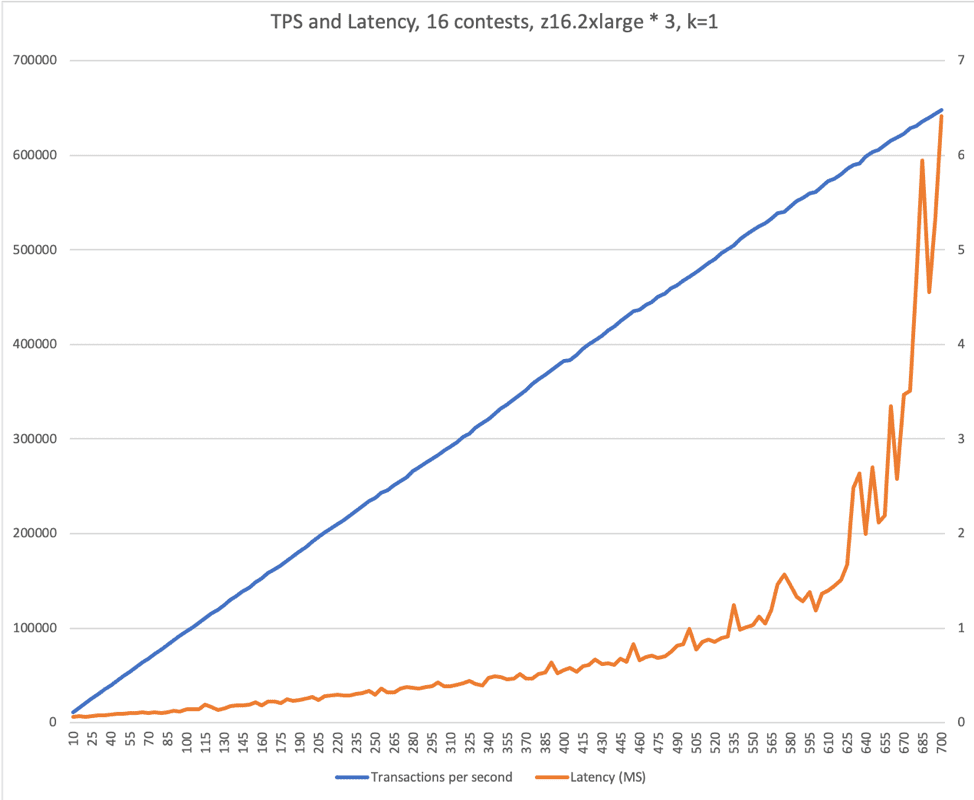

Test 1: 16 Contests

Customers: 3,000,000

Contests: 16

Contest size: 40,000

Method: starting from 10K TPS increase throughput in 5K transactions per second increments until the 95th percentile latency > 5ms.

We were able to scale to 640K TPS (blue line) with a latency of around 3ms (orange line), with an even distribution of contests. The sudden spike at the end is caused by us running out of CPU.

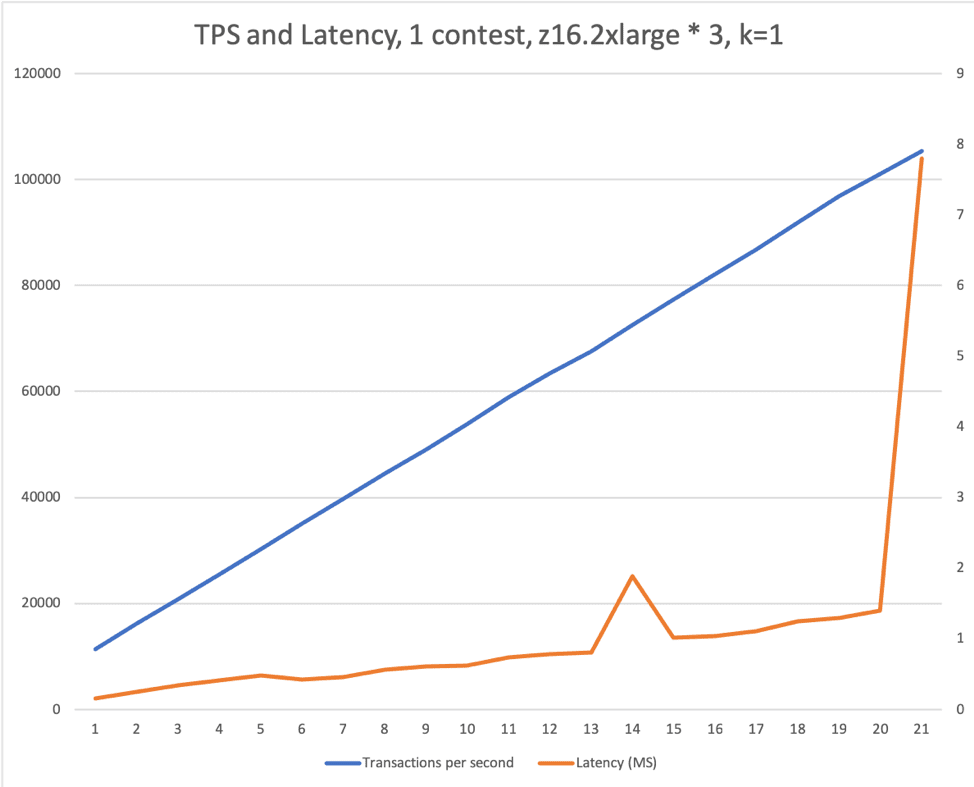

Test 2: 1 Big Contest

Customers: 3,000,000

Contest size: 1,000,000

Method: Starting from 10K TPS increase throughput in 5K transactions per second increments until the 95th percentile latency > 5ms.

The nature of this test means that all the work for a given contest will be channeled through a single partition in Volt Active Data. We steadily increased the load until 95th percentile latency was > 5ms, by which time we were able to register 105K entrants per second.

The code is available on Bitbucket.

Conclusion

While not ‘officially’ a HTAP/Translytics product Volt Active Data’s architecture is very good at workloads which involve counting/running totals. While the ‘traditional’ Volt Active Data user has been someone trying to accurately keep track of per-user totals such as bandwidth usage or mobile phone credit, applications such as Fantasy Sports can also benefit.

Note:

Source data for the graphs is on Bitbucket.